Here’s how human-like bots perform online fraud

In 2019, login pages were the prime target of fraudsters across different verticals. They used bad bots to carry out two types of online fraud: account takeover, to steal personally identifiable information (PII) and payment card details; and fake account creation, to validate stolen payment card details (carding attacks) or to cash out stolen cards.

For online businesses and their customers, the growing threat of online fraud is a real concern. With stringent data and privacy regulations such as GDPR and CCPA, online fraud is not just a business issue but also a legal one. For many organisations, a data breach could mean ceasing to exist because of the heavy fines these regulations can impose.

The real challenge is not that organisations have adopted weak data and payment security. With every measure online merchants take to tighten security and thwart malicious activity, cybercriminals seem to up their game and outwit them. Online businesses today face a tireless legion of bots that can bypass security defences to commit fraud.

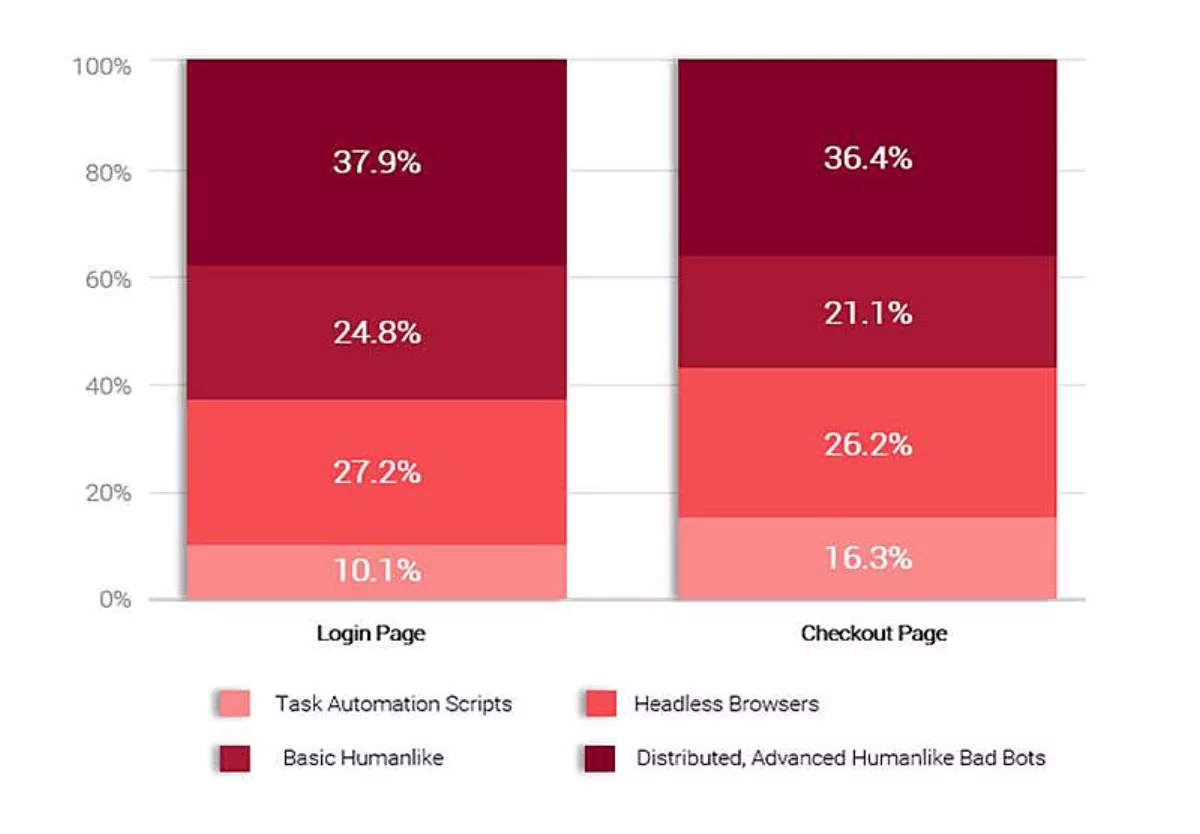

The bad bots that commit online fraud are highly sophisticated and can mimic human behaviour. According to the Big Bad Bot Problem 2020 report, 62.7% of bad bots on login pages can mimic human behaviour. That means these bots can take over user accounts or create fake accounts to carry out carding or cashing-out attacks. Similarly, 57.5% of bad bots on checkout pages can simulate human behaviour when performing carding attacks.

Behaviour of bad bots by generation

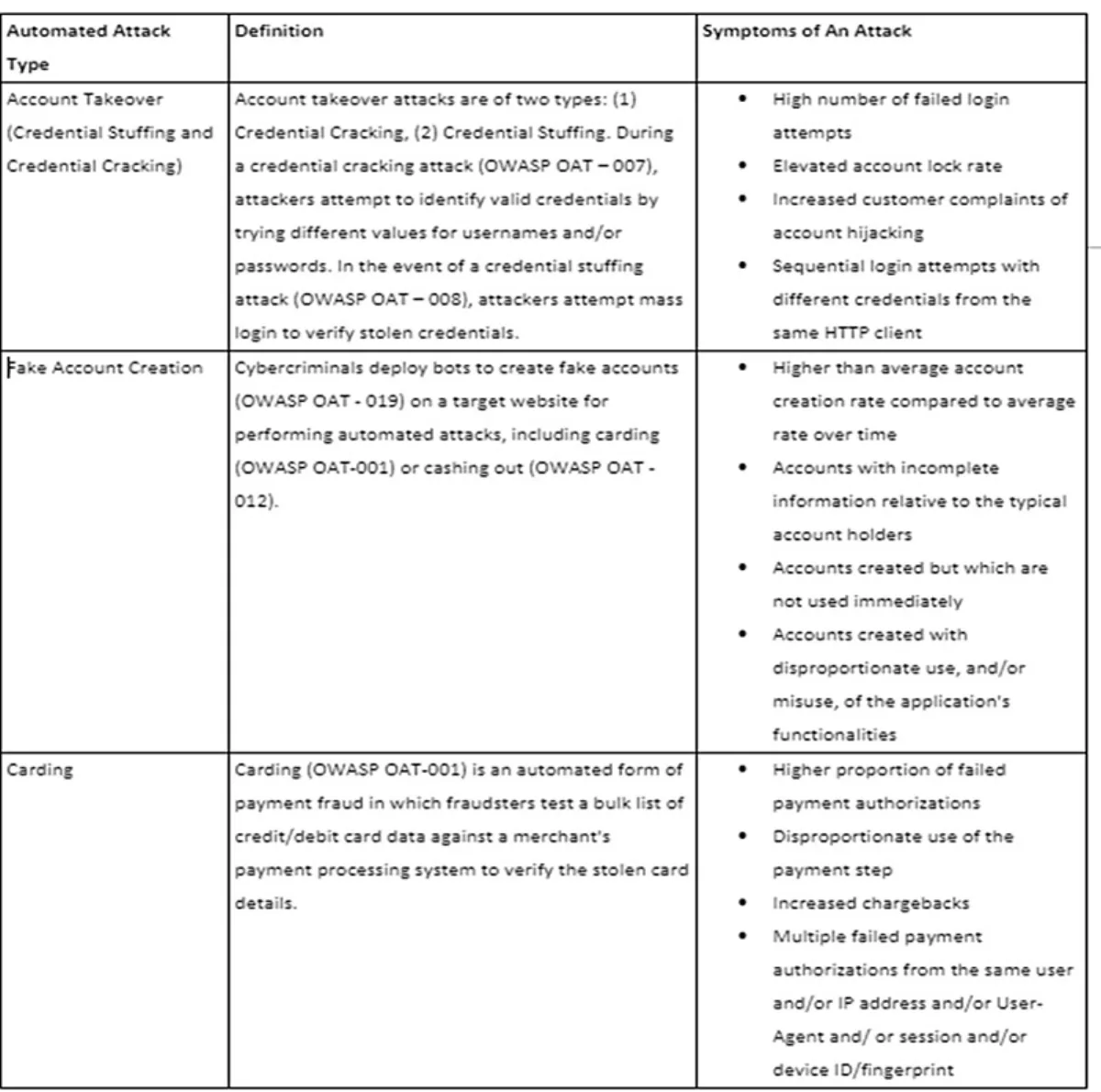

Online fraud attacks and their symptoms

Per OWASP, here are the attack symptoms to watch out for:

Online fraud during the coronavirus pandemic

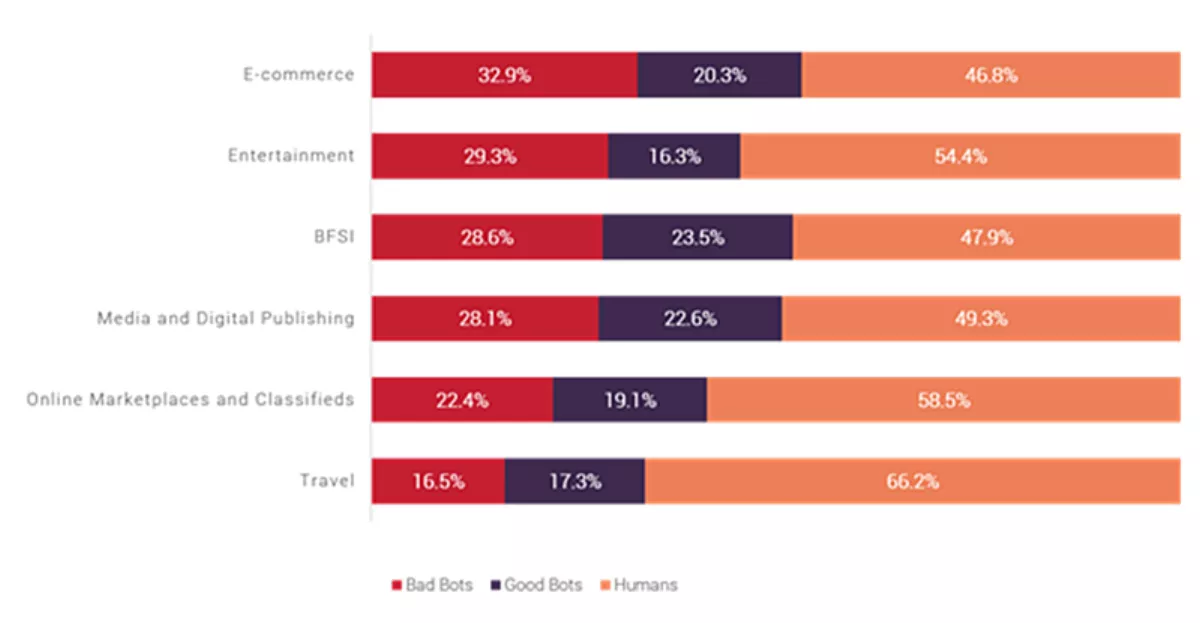

While the world struggles to find a cure for coronavirus, even healthcare organisations are under cyber attack. My company observed a spike in bot activity against eCommerce, entertainment, and BFSI (banking, financial services and insurance) in March 2020. During the pandemic, cybercriminals are targeting eCommerce and financial services institutions with account takeover attacks.

Traffic distribution by industry – March 2020

Online businesses need to adopt a range of measures to avert online fraud. Conventional security measures identify and block bots by applying thresholds to traffic from recognised attack sources, for example the IP addresses of known botnet herders. Such approaches are ineffective against bots that simulate human behaviour and rotate through thousands of IP addresses to commit fraud.
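The weakness of per-source thresholds is easy to see in a minimal Python sketch. It assumes a hypothetical rule that blocks an IP address after five login attempts; the addresses are documentation and private-range examples, not real attack data. A bot that sends all of its attempts from one address is stopped almost immediately, while the same volume rotated through 1,000 addresses never trips the limit.

```python
from collections import Counter
from typing import List

# Hypothetical rule: block an IP address after this many login attempts.
MAX_ATTEMPTS_PER_IP = 5


def blocked_attempts(source_ips: List[str], limit: int = MAX_ATTEMPTS_PER_IP) -> int:
    """Return how many attempts a simple per-IP threshold would reject."""
    attempts_per_ip: Counter = Counter()
    blocked = 0
    for ip in source_ips:
        attempts_per_ip[ip] += 1
        if attempts_per_ip[ip] > limit:
            blocked += 1
    return blocked


# Naive bot: 1,000 attempts from a single address.
single_ip = ["203.0.113.7"] * 1000
# Evasive bot: the same 1,000 attempts, one per address.
rotating = [f"10.0.{i // 256}.{i % 256}" for i in range(1000)]

print(blocked_attempts(single_ip))  # 995
print(blocked_attempts(rotating))   # 0
```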

We recommend the following action plan to spot and prevent online fraud:

- Constantly monitor traffic sources and restrict login attempts per session/user/IP address/device.

- Develop the capability to detect automated behavioural patterns and deploy systems that can infer the intent of automated traffic distributed across multiple sessions and sources.

- Building an accurate bot detection engine is a balancing act. Tune it to eliminate false negatives and you end up with more false positives, and vice versa. The lack of historical labelled data is one of the major obstacles to building an accurate detection system.

- The best approach for an organisation trying to build an ML-powered automated bot management solution is to create a closed-loop feedback system that continuously improves the machine-learning models using signals collected directly from end users.

- Monitor and restrict social media logins. Ensure that users have unique passwords, and educate them about the risks of password reuse to prevent credential stuffing and credential cracking attempts.
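Restricting login attempts along several dimensions at once, as the first recommendation suggests, could look like the following minimal Python sketch. The `MultiKeyRateLimiter` class, the dimension names and the limits are hypothetical, chosen for illustration: an attempt is rejected if any of its keys has already reached its limit within a sliding time window, so rotating IP addresses alone no longer helps an attacker who keeps hitting the same account or device.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional, Tuple


class MultiKeyRateLimiter:
    """Sliding-window limiter that checks every identifier of a login attempt.

    An attempt is rejected if any of its keys (e.g. session, user, IP, device)
    has already reached its limit inside the current window.
    """

    def __init__(self, limits: Dict[str, int], window_seconds: float = 60.0):
        self.limits = limits  # hypothetical per-dimension limits
        self.window = window_seconds
        self.history: Dict[Tuple[str, str], Deque[float]] = defaultdict(deque)

    def allow(self, attempt: Dict[str, str], now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        keys = [(dim, value) for dim, value in attempt.items() if dim in self.limits]
        # Evict timestamps that have slid out of the window.
        for key in keys:
            timestamps = self.history[key]
            while timestamps and now - timestamps[0] >= self.window:
                timestamps.popleft()
        # Reject if any single dimension is already at its limit.
        if any(len(self.history[key]) >= self.limits[key[0]] for key in keys):
            return False
        # Record the accepted attempt against every dimension.
        for key in keys:
            self.history[key].append(now)
        return True


limiter = MultiKeyRateLimiter({"user": 3, "ip": 20, "device": 20}, window_seconds=60)
# Each attempt uses a fresh IP and device, but all target the same account.
results = [
    limiter.allow({"user": "alice", "ip": f"10.0.0.{i}", "device": f"dev-{i}"}, now=float(i))
    for i in range(5)
]
print(results)  # [True, True, True, False, False]
# Once the early attempts leave the 60-second window, the account is usable again.
later = limiter.allow({"user": "alice", "ip": "10.0.0.99", "device": "dev-99"}, now=61.0)
print(later)  # True
```

Rejected attempts are not recorded in this sketch, so a blocked key recovers as soon as its earlier attempts leave the window; recording rejections as well would extend the lockout for persistent attackers.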
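Detecting automated traffic that is spread across many sessions and sources usually means aggregating events by something other than the IP address. As one illustrative approach, not a prescribed method, the sketch below groups login events by a hypothetical device fingerprint and flags fingerprints that touch many IP addresses and accounts while almost always failing, a pattern consistent with credential stuffing. The `LoginEvent` fields and the thresholds are assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LoginEvent:
    fingerprint: str  # hypothetical client/device fingerprint
    ip: str
    username: str
    success: bool


def suspicious_fingerprints(events, min_ips=3, min_users=3, max_success_rate=0.2):
    """Return fingerprints whose login traffic spans many IPs and accounts
    but rarely succeeds, which is consistent with credential stuffing."""
    ips = defaultdict(set)
    users = defaultdict(set)
    totals = defaultdict(int)
    successes = defaultdict(int)
    for event in events:
        ips[event.fingerprint].add(event.ip)
        users[event.fingerprint].add(event.username)
        totals[event.fingerprint] += 1
        successes[event.fingerprint] += int(event.success)
    return sorted(
        fp
        for fp, total in totals.items()
        if len(ips[fp]) >= min_ips
        and len(users[fp]) >= min_users
        and successes[fp] / total <= max_success_rate
    )


# One fingerprint cycling through 10 IPs and 10 accounts, with a single success...
events = [LoginEvent("fp-bot", f"198.51.100.{i}", f"user{i}", i == 0) for i in range(10)]
# ...versus one person logging in from home three times.
events += [LoginEvent("fp-human", "192.0.2.10", "carol", True) for _ in range(3)]
print(suspicious_fingerprints(events))  # ['fp-bot']
```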
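The trade-off between false negatives and false positives can be made concrete by sweeping a decision threshold over a detector's scores. The scores and labels below are made-up illustrative values, not measurements; the point is only that lowering the threshold to catch more bots (fewer false negatives) also flags more humans (more false positives).

```python
from typing import List, Tuple


def error_counts(scores: List[float], labels: List[bool], threshold: float) -> Tuple[int, int]:
    """Return (false_positives, false_negatives) when traffic scoring at or
    above `threshold` is classified as a bot. Labels: True = bot, False = human."""
    fp = sum(1 for s, is_bot in zip(scores, labels) if s >= threshold and not is_bot)
    fn = sum(1 for s, is_bot in zip(scores, labels) if s < threshold and is_bot)
    return fp, fn


# Illustrative (made-up) bot-likelihood scores with ground-truth labels.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [True, True, False, True, False, True, False, False, False, False]

for t in (0.9, 0.65, 0.5):
    print(t, error_counts(scores, labels, t))
# 0.9 (0, 2)
# 0.65 (1, 1)
# 0.5 (2, 0)
```

Collecting reliable labels for a table like this is exactly where the shortage of historical labelled data bites.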
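A closed feedback loop can be as simple as a model that updates itself each time an end-user signal confirms or refutes a prediction. The sketch below assumes a logistic-regression scorer trained online with stochastic gradient descent on log loss; the three feature values and the feedback sources named in the comments (a failed challenge, a confirmed legitimate login) are hypothetical stand-ins for whatever signals a real system collects.

```python
import math
from typing import List


class OnlineBotModel:
    """Logistic-regression bot scorer updated incrementally from feedback."""

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.lr = learning_rate

    def score(self, features: List[float]) -> float:
        """Estimated probability that the request is automated."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def feedback(self, features: List[float], is_bot: bool) -> None:
        """Apply one SGD step on log loss using a label from an end-user signal."""
        error = self.score(features) - (1.0 if is_bot else 0.0)
        self.weights = [w - self.lr * error * x for w, x in zip(self.weights, features)]
        self.bias -= self.lr * error


model = OnlineBotModel(n_features=3)
# Hypothetical features: [request rate, failed-login ratio, pointer movement seen]
bot_like = [0.9, 0.8, 0.0]
human_like = [0.1, 0.0, 1.0]
print(model.score(bot_like))  # 0.5 before any feedback
for _ in range(50):
    model.feedback(bot_like, is_bot=True)       # e.g. a failed challenge
    model.feedback(human_like, is_bot=False)    # e.g. a confirmed legitimate login
print(model.score(bot_like) > 0.5, model.score(human_like) < 0.5)  # True True
```

Feedback from end users can be noisy or even manipulated by attackers, so a real system would likely validate and monitor these updates rather than apply every label immediately as this sketch does.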